Creating a Garden Defense System Using TFLite

My mom has a garden and chickens. Around the fenced-in garden is a perimeter of bark and shrubs. The chickens love foraging in the bark and can easily kick and scratch several cubic feet of it out into the field within minutes. Whenever they free-ranged, my mom had to keep an eye on them, which often limited how long she let them out. Until now.

I told my mom that I would devise a solution to the chicken problem for Mother's Day. I took it as an opportunity to build a solution based on object detection, since I had never worked with object detection tools before.

Source code here: https://github.com/twofingerrightclick/GardenDefenseSystem

Getting Started with Xamarin.Forms

The Garden Defense System is a Xamarin.Forms app that uses an object detection model exported from Microsoft’s Custom Vision service to detect chicken intruders.

I needed to figure out three other things: how to automate taking photos, how to process them, and how to keep the implementation to roughly 10 hours (adding TensorFlow Lite, unfortunately, would later turn this into a longer project). For processing the photos within that time budget, I took the easy route with Microsoft's Custom Vision, which makes training models for tasks like this very easy. For taking the photos, the simplest solution would be a Linux box like a Raspberry Pi. However, I am using all the ones I have, they are currently in short supply, and the alternatives would mean troubleshooting OS issues. I considered old Android phones but was daunted by the prospect of automating photo capture within Android's restrictions. Then I realized I could use an auto-clicker app, which works through Android's Accessibility API, to automate the user input needed to take photos!

Once I was confident that I wouldn't need to dig into Android APIs, I created a single-page Xamarin.Forms app. It takes a photo using the Xamarin.Essentials Media Picker, optimizes it, and posts it to Custom Vision's prediction API endpoint. Depending on the prediction results, it then either sounds an alert or does nothing.
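Here is a minimal sketch of the post-and-decide step, based on Custom Vision's v3.0 prediction REST API for object detection. It is not the app's actual code. The endpoint, project ID, published iteration name, prediction key, the "chicken" tag name, and the 0.5 probability threshold are all placeholders or assumptions. The photo-taking and image-optimizing steps are left out.

```csharp
using System;
using System.Linq;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Text.Json;

// Hypothetical helper: endpoint, IDs, key, tag name, and threshold are placeholders.
public static class CustomVisionSketch
{
    // Builds a POST for Custom Vision's v3.0 "detect image" prediction endpoint,
    // sending the raw image bytes as application/octet-stream.
    public static HttpRequestMessage BuildDetectRequest(
        string endpoint, Guid projectId, string publishedName,
        string predictionKey, byte[] imageBytes)
    {
        string url = $"{endpoint.TrimEnd('/')}/customvision/v3.0/Prediction/" +
                     $"{projectId}/detect/iterations/{publishedName}/image";
        var request = new HttpRequestMessage(HttpMethod.Post, url)
        {
            Content = new ByteArrayContent(imageBytes)
        };
        request.Content.Headers.ContentType =
            new MediaTypeHeaderValue("application/octet-stream");
        request.Headers.Add("Prediction-Key", predictionKey);
        return request;
    }

    // Returns true when any prediction with the given tag meets the threshold.
    public static bool ShouldAlert(string responseJson,
        string tagName = "chicken", double threshold = 0.5)
    {
        using var document = JsonDocument.Parse(responseJson);
        return document.RootElement
            .GetProperty("predictions")
            .EnumerateArray()
            .Any(p => p.GetProperty("tagName").GetString() == tagName &&
                      p.GetProperty("probability").GetDouble() >= threshold);
    }
}
```

In an app, the request would be sent with a shared HttpClient via SendAsync. The response body would be read with ReadAsStringAsync and then passed to ShouldAlert to decide whether to play the alarm.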

The Garden Defense System in Action

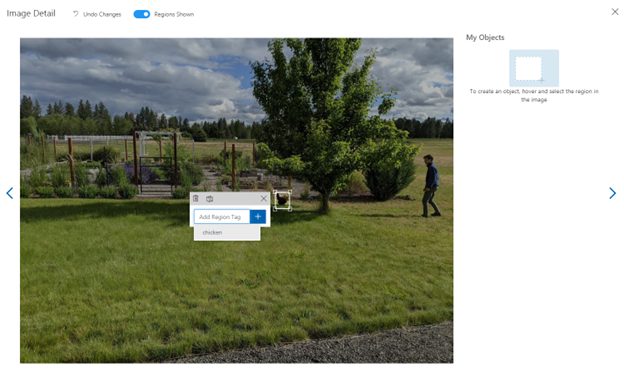

I took around 150 photos of the chickens from roughly the distance at which the camera would be watching for them. I then tagged all the shots in Custom Vision and started a training run.

After about an hour of training, the model appeared proficient at detecting the chickens.

I then deployed the app to a spare Android phone with a cracked screen and mounted the phone on a window with a suction-cup mount.

As part of the minimum viable solution, the app plays an alarm when it spots a chicken. It runs from morning until sunset, when the chickens go to bed (the app includes sunset times).
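The post doesn't show how the app stores its sunset times, so the following is a hypothetical sketch. It assumes a lookup of local sunset times keyed by date plus a fixed morning start time, and it reports whether detection should be running at a given moment.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical: the original app's sunset data format is not shown.
public static class DetectionSchedule
{
    // True between the morning start time and that day's sunset.
    public static bool IsActive(DateTime localNow, TimeSpan morningStart,
        IReadOnlyDictionary<DateTime, TimeSpan> sunsetByDate)
    {
        // No sunset entry for today: stay off rather than guess.
        if (!sunsetByDate.TryGetValue(localNow.Date, out TimeSpan sunset))
            return false;

        TimeSpan timeOfDay = localNow.TimeOfDay;
        return timeOfDay >= morningStart && timeOfDay < sunset;
    }
}
```

Falling back to "off" when a date is missing is a design choice: it avoids a false alarm at night, but it means the table must cover every day the phone is running.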

I am thinking of ways to automatically deter the chickens when they are spotted, but my mom has been thrilled with the current implementation all the same. I may not need to automate it at all: after a few days, the dog started to associate the alarm with the chickens. His barking from the window is enough to get the chickens moving away, as they expect someone is coming out after them.

Using TensorFlow Lite Instead of the Custom Vision API

The Custom Vision API costs money to use, and my parents' home internet connection is not reliable, so I wanted to use TensorFlow Lite to process the images on the phone instead. There is a wrapper called Xamarin.TensorFlow.Lite that is part of Xamarin.GooglePlayServicesComponents. There are several blogs about using it for image classification, but I couldn't find any about object detection. I had some difficulty getting the casting right for the input and output objects. Here is a GitHub issue I opened where I documented my struggle with this.

I summarize the essentials of using Xamarin.TensorFlow.Lite to process an image with an object detection model below.

Inputs and Outputs for TFLite Models

The inputs and outputs for TensorFlow Lite models are essentially the same regardless of language (see the TensorFlow docs). Getting them right in a Xamarin app isn't easy, though, because of all the casting required.

Use Interpreter.RunForMultipleInputsOutputs(). The input is the same as for image classification (a ByteBuffer of the image), but placed in an Object array.
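To make "a ByteBuffer of the image" concrete, here is a platform-independent sketch of the packing step. It rests on two assumptions. First, the model takes a float32 tensor of RGB values in the 0-255 range, laid out as height x width x channels. Second, pixels arrive as packed ARGB ints, the format Android's Bitmap.getPixels fills in. Confirm the input size, channel order, and scaling against your model's input tensor in Netron. On Android, the same bytes would go into a direct ByteBuffer set to native byte order (ByteBuffer.allocateDirect(...).order(ByteOrder.nativeOrder()) in Java terms).

```csharp
using System;

// Sketch only: assumes float32 RGB input in 0-255, HWC layout, ARGB source pixels.
public static class ImageInputPacking
{
    public static byte[] PackArgbAsFloat32Rgb(int[] argbPixels, int width, int height)
    {
        if (argbPixels.Length != width * height)
            throw new ArgumentException("Pixel count does not match width * height.");

        var floats = new float[width * height * 3];
        for (int i = 0; i < argbPixels.Length; i++)
        {
            int p = argbPixels[i];
            floats[i * 3] = (p >> 16) & 0xFF;     // red
            floats[i * 3 + 1] = (p >> 8) & 0xFF;  // green
            floats[i * 3 + 2] = p & 0xFF;         // blue (alpha is dropped)
        }

        // Copy the floats into bytes in this machine's byte order, matching a
        // ByteBuffer set to the native byte order on the same device.
        var bytes = new byte[floats.Length * sizeof(float)];
        Buffer.BlockCopy(floats, 0, bytes, 0, bytes.Length);
        return bytes;
    }
}
```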

The output depends on your model, of course. Use a tool like Netron to see the types and dimensions of your output tensors, then create an array of the matching type and shape for each one. Put each output array in a map keyed by its output tensor index.
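The allocation half of that recipe can be sketched in plain C#. The detector code calls a CreateJaggedArray<T> helper whose implementation isn't shown, so the version here is an assumption. The Dictionary<int, object> stands in for the Java.Lang.Integer-keyed map that the Xamarin binding actually receives. The shapes assume boxes [N, 4] (float), classes [N] (long), and scores [N] (float), matching the model's outputs.

```csharp
using System.Collections.Generic;

// Sketch: plain .NET stand-ins for the output buffers; not the app's actual code.
public static class OutputBuffers
{
    // Hypothetical implementation of a CreateJaggedArray<T> helper.
    public static T[][] CreateJaggedArray<T>(int rows, int columns)
    {
        var result = new T[rows][];
        for (int i = 0; i < rows; i++)
            result[i] = new T[columns];
        return result;
    }

    // One array per output tensor, keyed by that tensor's output index.
    public static Dictionary<int, object> AllocateOutputs(
        int boxesIndex, int classesIndex, int scoresIndex, int numDetections)
    {
        return new Dictionary<int, object>
        {
            [boxesIndex] = CreateJaggedArray<float>(numDetections, 4), // [x1, y1, x2, y2] per detection
            [classesIndex] = new long[numDetections],
            [scoresIndex] = new float[numDetections],
        };
    }
}
```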

My model from Custom Vision had the following output tensor specs:

- detected_boxes: The detected bounding boxes. Each bounding box is represented as [x1, y1, x2, y2], where (x1, y1) and (x2, y2) are the coordinates of the box corners.
- detected_scores: The probability for each detected box.
- detected_classes: The class index for each detected box.
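Once the interpreter has filled those three arrays, the detections still need filtering before the app decides whether to alert. The following is a hedged sketch. It assumes the box coordinates are normalized to the 0-1 range and that a labels array maps class indexes to tag names. The Detection type, ParseDetections, and the score threshold are hypothetical, not part of the original app.

```csharp
using System.Collections.Generic;

// Hypothetical result type for one detection, in pixel coordinates.
public sealed class Detection
{
    public Detection(string label, float score, float left, float top, float right, float bottom)
    {
        Label = label; Score = score; Left = left; Top = top; Right = right; Bottom = bottom;
    }

    public string Label { get; }
    public float Score { get; }
    public float Left { get; }
    public float Top { get; }
    public float Right { get; }
    public float Bottom { get; }
}

public static class DetectionParser
{
    // Keeps detections at or above minScore and scales assumed-normalized
    // [x1, y1, x2, y2] boxes to pixel coordinates.
    public static List<Detection> ParseDetections(
        float[][] boxes, long[] classes, float[] scores, string[] labels,
        float minScore, int imageWidth, int imageHeight)
    {
        var results = new List<Detection>();
        for (int i = 0; i < scores.Length; i++)
        {
            if (scores[i] < minScore) continue; // skip low-confidence boxes

            float[] box = boxes[i];
            results.Add(new Detection(
                labels[classes[i]],
                scores[i],
                box[0] * imageWidth,    // x1 -> left
                box[1] * imageHeight,   // y1 -> top
                box[2] * imageWidth,    // x2 -> right
                box[3] * imageHeight)); // y2 -> bottom
        }
        return results;
    }
}
```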

To calculate the sizes of the output tensors, I use the number of detections that the model outputs:

```csharp
// GardenDefenseSystem.Droid.TensorflowObjectDetector
// detectedClassesOutputIndex = Interpreter.GetOutputIndex("detected_classes")
int numDetections = Interpreter
    .GetOutputTensor(detectedClassesOutputIndex)
    .NumElements(); // 64 for this model
```

And use that value to construct the arrays:

```csharp
// One [x1, y1, x2, y2] row per detection
_OutputBoxes = CreateJaggedArray<float>(numDetections, 4);
_OutputClasses = new long[numDetections];
_OutputScores = new float[numDetections];
```

Then construct the map:

```csharp
// Look up each output tensor's index by name (values shown are for this model)
int detectedBoxesOutputIndex = Interpreter.GetOutputIndex("detected_boxes");     // 0
int detectedClassesOutputIndex = Interpreter.GetOutputIndex("detected_classes"); // 1
int detectedScoresOutputIndex = Interpreter.GetOutputIndex("detected_scores");   // 2

// Each property getter wraps its backing array in a new Java.Lang.Object,
// so grab each wrapper once: the interpreter writes into these exact objects.
var javaizedOutputBoxes = OutputBoxes;
var javaizedOutputClasses = OutputClasses;
var javaizedOutputScores = OutputScores;

// The single input (the image ByteBuffer) goes in an Object array
Java.Lang.Object[] inputArray = { imageByteBuffer };

// Map each output tensor index to the Java array that receives its values
var outputMap = new Dictionary<Java.Lang.Integer, Java.Lang.Object>();
outputMap.Add(new Java.Lang.Integer(detectedBoxesOutputIndex), javaizedOutputBoxes);
outputMap.Add(new Java.Lang.Integer(detectedClassesOutputIndex), javaizedOutputClasses);
outputMap.Add(new Java.Lang.Integer(detectedScoresOutputIndex), javaizedOutputScores);

Interpreter.RunForMultipleInputsOutputs(inputArray, outputMap);

// Copy the results back into the .NET backing arrays via the property setters
OutputBoxes = javaizedOutputBoxes;
OutputClasses = javaizedOutputClasses;
OutputScores = javaizedOutputScores;
```

Note that some of the casting is handled by properties with backing fields for the outputs:

```csharp
// _OutputScores: array of shape [NUM_DETECTIONS]
// contains the scores of detected boxes
private float[] _OutputScores;

public Java.Lang.Object OutputScores
{
    // Wrap the .NET array as a Java array for the interpreter
    get => Java.Lang.Object.FromArray(_OutputScores);
    // Convert the Java array back into a .NET float[]
    set => _OutputScores = value.ToArray<float>();
}
```

Wrapping Up Xamarin.Forms and TFLite

I hope those examples are enough. If not, here is another example from another developer. I also answered a Stack Overflow question about outputs, so if you run into difficulties, feel free to comment there so that others can help or benefit from your efforts.

